Your team is manually processing 300 daily purchase orders across email, shared drives, and paper, with no automated way to get that data into your ERP system.

It works fine 290 out of 300 times. But on the 10 occasions when it breaks, it takes a lot with it.

During one of our demo calls, an operations leader shared how an employee's minor data entry mistake led to a ₹30-crore shipment being delayed by two months.

An automated data ingestion pipeline prevents such issues. The good news is it’s not so tough to create one anymore. I’ve covered all the basics to get started in this article:

- What are data ingestion pipelines?

- What type of data ingestion pipeline will suit your business: batch, real-time, or anything else?

- Which ingestion tools work best for different sources of data?

- How do we handle the common challenges in setting up such data ingestion pipelines?

What is data ingestion?

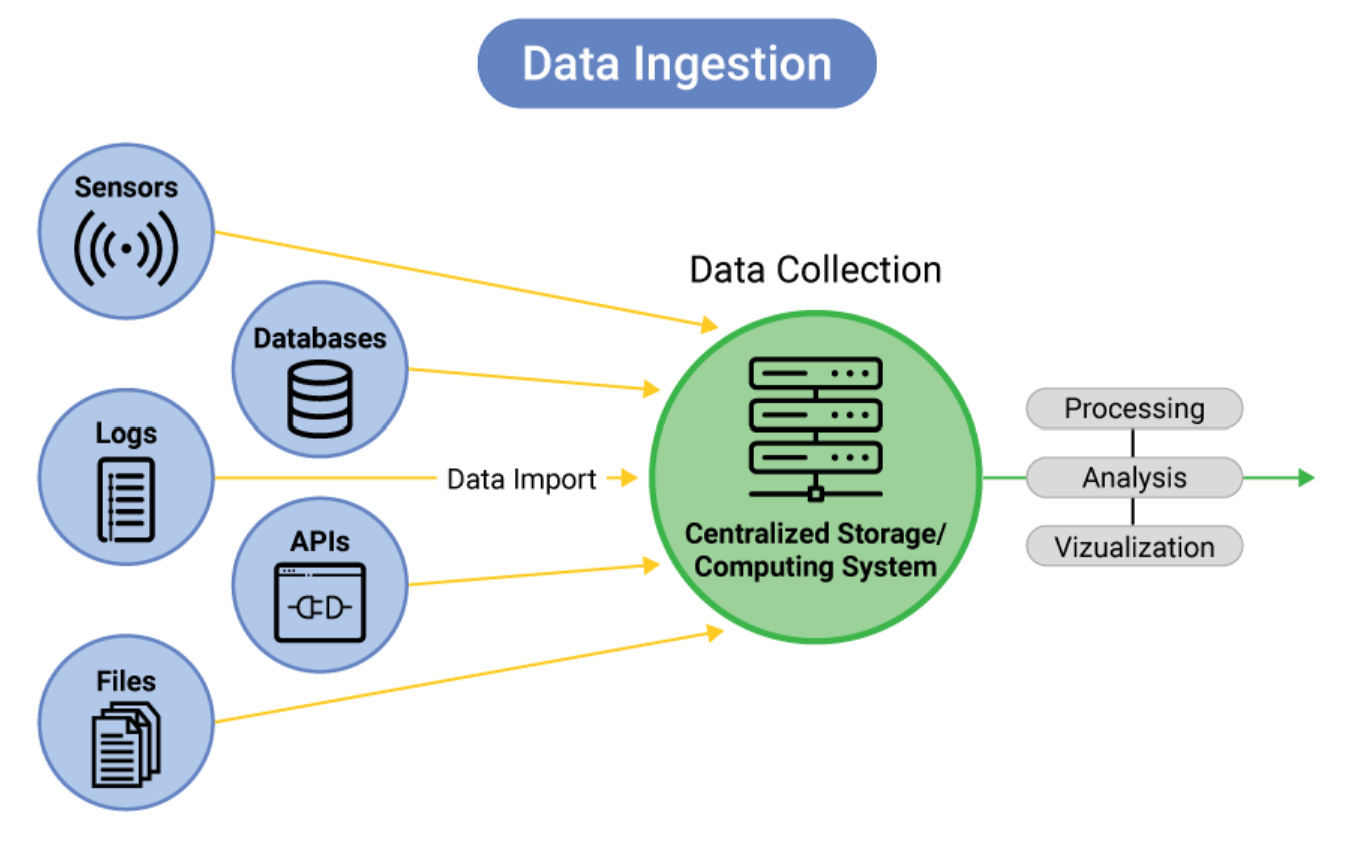

Data ingestion is the first step in the data pipeline, where raw data is gathered from various sources and put into a single location. This data is then used for business operations and analytics.

For instance, data ingestion pipelines will process purchase orders (PO) scattered across email, shared drives, and physical couriers, and consolidate them in the ERP. From there, you can set automated workflows to kickstart shipping or use analytics tools to find patterns in orders.

Typical data ingestion workflow

Key benefits of automated data ingestion pipelines

Many companies, particularly those in manufacturing and logistics domains, are automating data ingestion pipelines for these reasons:

Improves your operations

With an automated data ingestion pipeline, you’re no longer dependent on someone manually entering data to keep business operations running smoothly. Whenever a purchase order lands in your inbox, the pipeline brings its data into the ERP for order preparation and shipment processing.

Integrates data and provides insights faster

Besides triggering business processes, data ingestion workflows integrate scattered data into centralized storage.

If you work with POs, purchase order ingestion workflows will bring all order data into SAP. The data stored in SAP becomes a single source of truth for record-keeping, compliance, and analytics.

Next time you need to create inventory forecasts or build financial reports, you have a centralized database to pull insights from faster.

Future-proofs your business

AI and automation soon won’t be a luxury for companies, says Docxster’s Founder, Ramzy Syed.

"Imagine companies A and B doing a similar kind of business. Company A has already automated repetitive tasks, and Company B has not yet implemented any automation. Soon, it will hold company B back. When a new RFP is issued, their hands would be full even to apply. Because they didn't automate the busywork," he adds.

Companies that adopt the latest technologies to automate repetitive tasks will stay ahead in the game. Manually moving data from documents into your systems is one such repetitive, low-value task.

Automated data ingestion pipelines will give employees time to focus on more strategic work and future-proof your business.

What are the types of data ingestion?

Choosing the type of data ingestion depends on your data volume and use case. You can choose between these options:

Batch data ingestion

Batch-based data ingestion processes data that has piled up over a period of time. For example, say you have a history of purchase orders in physical form that you now want to move to your ERP. You can plan a one-time batch flow to digitize all of those files.

There can be more recurring use cases of batch processing as well. Let's say you use third-party analytics software for inventory forecast or financial reporting. You might want to move data to that platform monthly, quarterly, or as required for analytics. Batch ingestion is for more strategic tasks and less for regular operations.

Real-time data ingestion

Real-time data pipelines are required for data that demands immediate action. Some examples of such use cases are sensor data that flags equipment failures, real-time inventory data for fast-moving products, and geospatial data that requires immediate re-routing of vehicles/fleets.

Micro-batching data ingestion

Micro-batching is the sweet spot between batch and real-time ingestion. You process data in small and frequent batches (hourly, every four hours, once daily, etc.).

It is perfect for use cases where large data volumes are expected but immediate processing is unnecessary. For instance, you can set up all new purchase orders to be processed at the top of the hour. Depending on your service level agreements, you can also choose to process orders only once a day.

Micro-batching also allows you to achieve near real-time ingestion by running batches at short intervals.
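To make the "top of the hour" idea concrete, here is a minimal sketch of how incoming purchase orders could be grouped into hourly micro-batches. The function name `hourly_batches`, the PO numbers, and the timestamps are all made up for illustration; a real tool would handle the scheduling for you.

```python
from datetime import datetime

def hourly_batches(orders):
    """Group (received_at, po_number) pairs by the hour in which they arrived."""
    batches = {}
    for received_at, po_number in orders:
        # Truncate the timestamp to the top of its hour to get the batch key.
        window = received_at.replace(minute=0, second=0, microsecond=0)
        batches.setdefault(window, []).append(po_number)
    return batches

# Hypothetical sample data: three POs arriving across two clock hours.
orders = [
    (datetime(2024, 1, 8, 9, 5), "PO-1001"),
    (datetime(2024, 1, 8, 9, 48), "PO-1002"),
    (datetime(2024, 1, 8, 10, 2), "PO-1003"),
]
batches = hourly_batches(orders)
```

Shrinking the window (say, to five minutes) moves the same logic closer to real-time, while widening it to a day gives you the once-daily option.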

Lambda data ingestion

Lambda ingestion combines batch and real-time processing. It is recommended for use cases where you want both immediate action and scheduled batch processing.

For example, you want machine sensor data to be processed in real time to generate alerts if any machine breaks down. On top of that, you also need to store sensor data in a data lake for analytics that can help you find patterns in machine breakdowns.

In such cases, you can use a lambda architecture. From the real-time layer, you'll get an immediate alert. The batch layer will send data to a cloud data lake for analytics.
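The two layers can be sketched in a few lines. This is only an illustration: the field names (`machine_id`, `temp_c`), the 90 °C threshold, and the in-memory lists standing in for an alerting system and a data lake are all assumptions of mine, not how any particular platform implements it.

```python
def ingest_reading(reading, alerts, batch_buffer, max_temp_c=90.0):
    """Send one sensor reading down both paths of a lambda-style design."""
    # Real-time layer: act immediately on readings that cross the threshold.
    if reading["temp_c"] > max_temp_c:
        alerts.append(
            f"Machine {reading['machine_id']} overheating: {reading['temp_c']} C"
        )
    # Batch layer: keep every reading for a later scheduled load.
    batch_buffer.append(reading)

alerts, batch_buffer = [], []
for reading in [
    {"machine_id": "M1", "temp_c": 71.5},
    {"machine_id": "M2", "temp_c": 96.0},
]:
    ingest_reading(reading, alerts, batch_buffer)
```

Every reading lands in the batch buffer, but only the out-of-range one raises an alert, which is the split the lambda approach is meant to give you.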

Change data capture

Change data capture (CDC) is a data ingestion technique where you process only changed records from a table.

For instance, inventory tables are updated frequently whenever an order is processed. If you need the latest inventory data in a system, you can set up a CDC layer on the inventory table alone.

CDC ingestion pipelines are good for capturing data from dynamic tables.
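Here is a simplified sketch of the idea using an `updated_at` timestamp as a watermark. Note that this is a timestamp-based approximation; production CDC setups often read the database's change log instead. The table rows, column names, and the `changed_since` helper are hypothetical.

```python
def changed_since(rows, last_sync):
    """Return rows whose updated_at is later than the last sync point."""
    return [row for row in rows if row["updated_at"] > last_sync]

# Hypothetical inventory table with Unix timestamps for the last update.
inventory = [
    {"sku": "A-100", "qty": 40, "updated_at": 1700000000},
    {"sku": "B-200", "qty": 12, "updated_at": 1700003600},
    {"sku": "C-300", "qty": 7, "updated_at": 1700007200},
]

last_sync = 1700003600
changes = changed_since(inventory, last_sync)

# Advance the watermark so the next run skips what was just captured.
if changes:
    last_sync = max(row["updated_at"] for row in changes)
```

Only the row that changed after the last sync is picked up, so the downstream system gets fresh inventory data without reprocessing the whole table.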

How does data ingestion work?

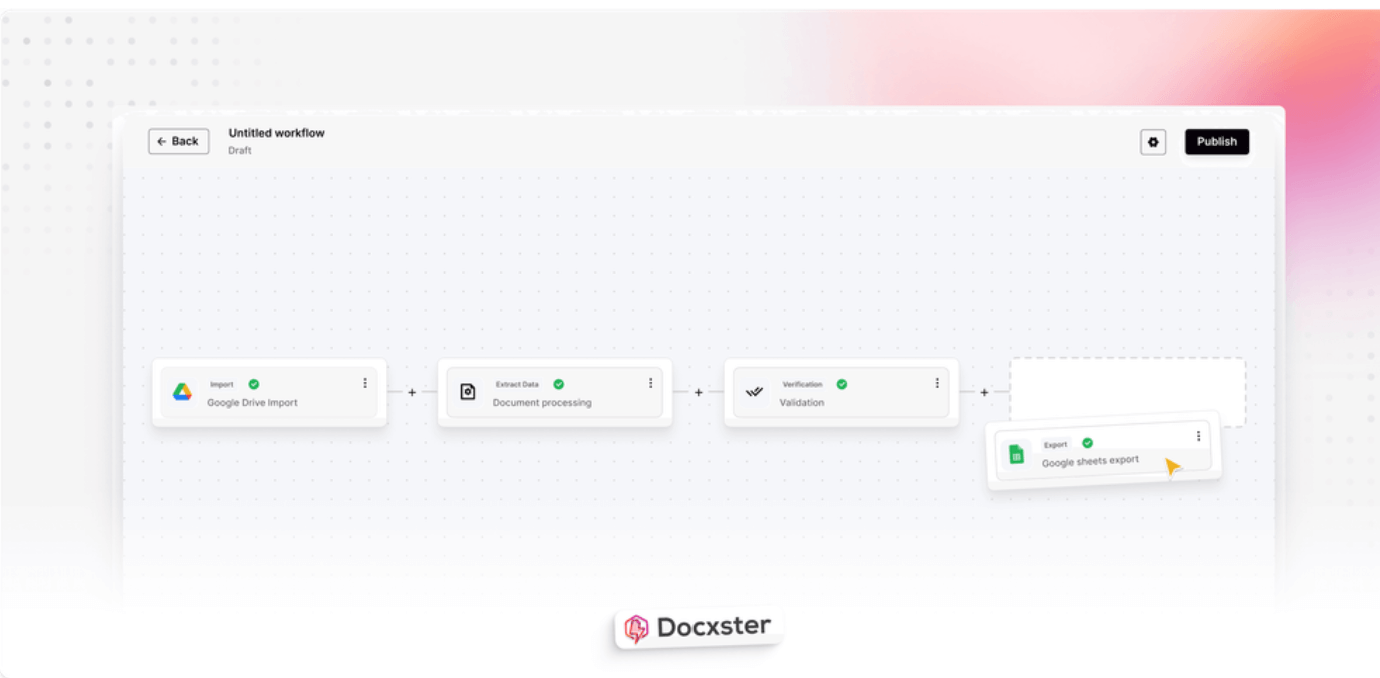

A typical data ingestion workflow starts with identifying the data sources. Then the data is extracted, processed, and loaded into the target destination. You can easily set up all these steps in a data ingestion tool like Docxster.

I’ll break down each of these steps below:

Data source identification

As a first step, you need to understand the data source to plan the ingestion pipeline. Mainly, you need to know how you can import files from it and what type of data ingestion it requires: batch, real-time, micro-batching, etc.

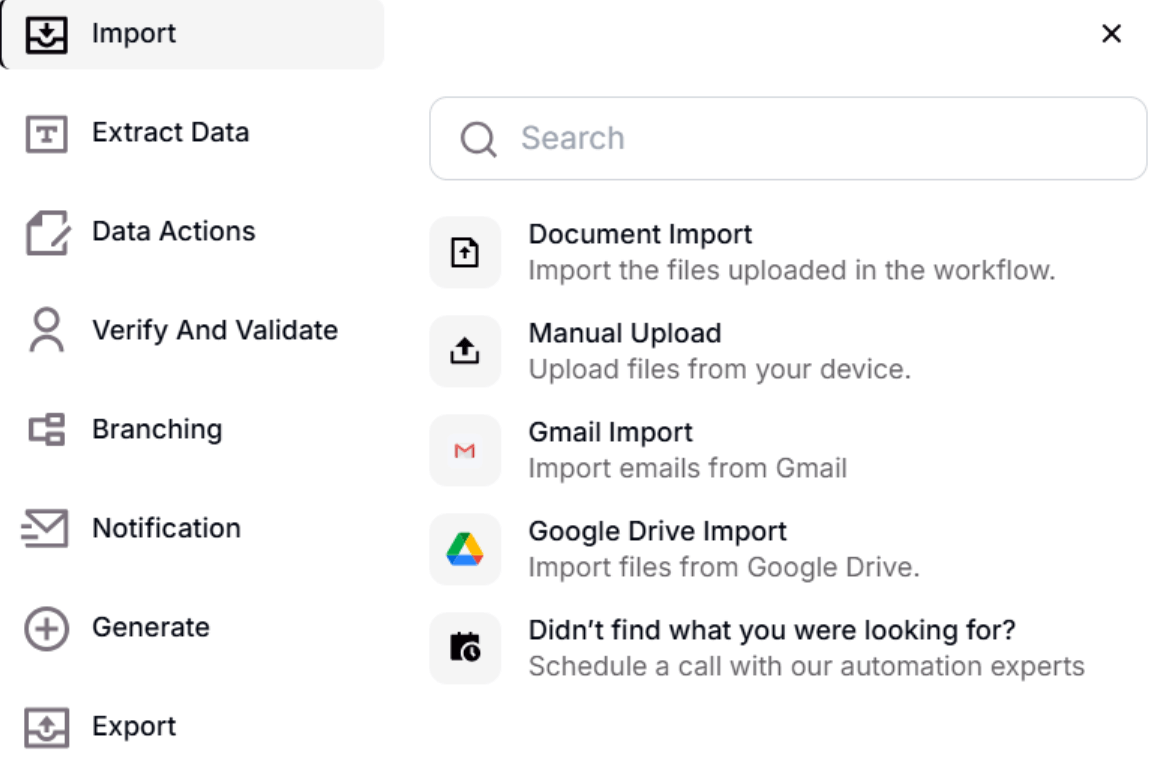

Data ingestion platforms like Docxster provide built-in integrations with common data sources like shared drives and email. You can easily import files without any extra coding.

Docxster’s setup for importing documents from Google, Gmail, or other sources for ingestion

You can also set the data ingestion type directly in the tool itself. Docxster supports the most common types: batch, real-time, and micro-batching.

Data extraction

Once the source is connected, you can create a step to process the files. Traditionally, businesses have used template-based extraction: ML engineers define a template, and data is pulled from documents based on that template's format. This method is rigid and breaks whenever a document deviates from the format.

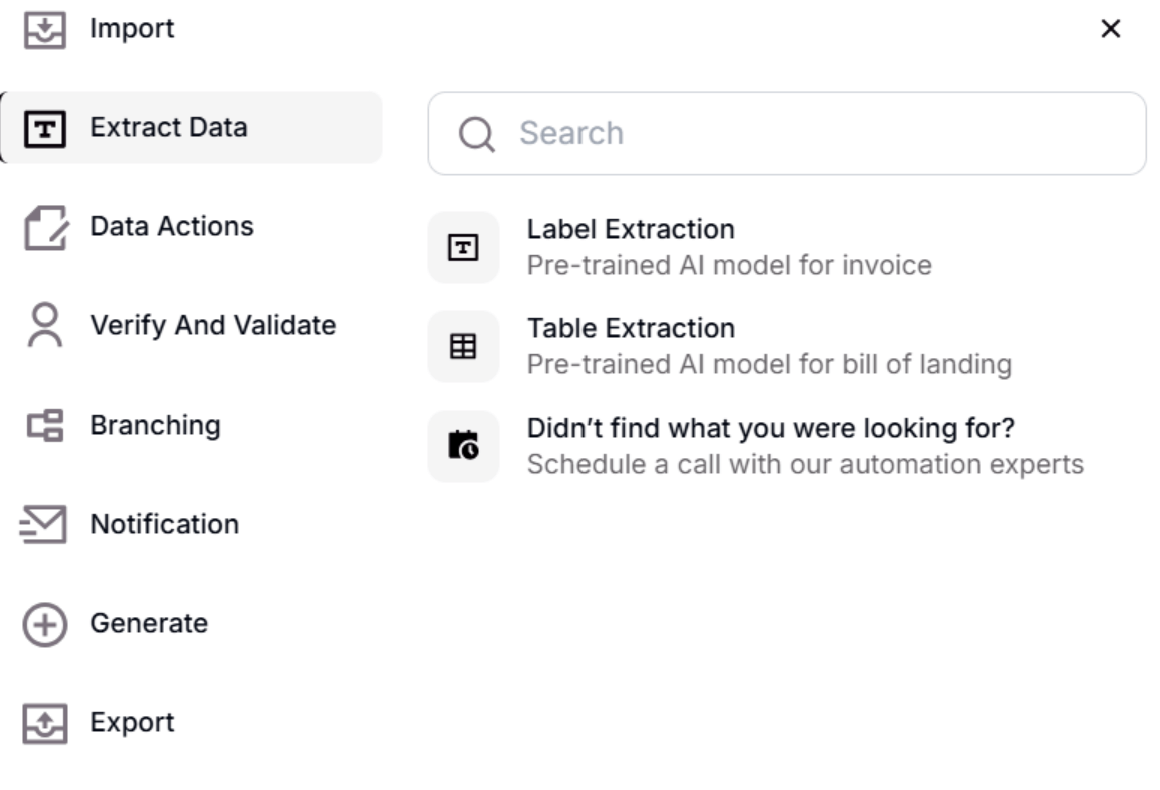

AI-powered data ingestion platforms like Docxster follow a templateless extraction model and eliminate the need for coding. Templateless extraction models read the documents using AI, interpret context, and extract the exact information you need.

Configure template-less extraction from documents in Docxster using pre-trained AI and machine learning models

Data preprocessing

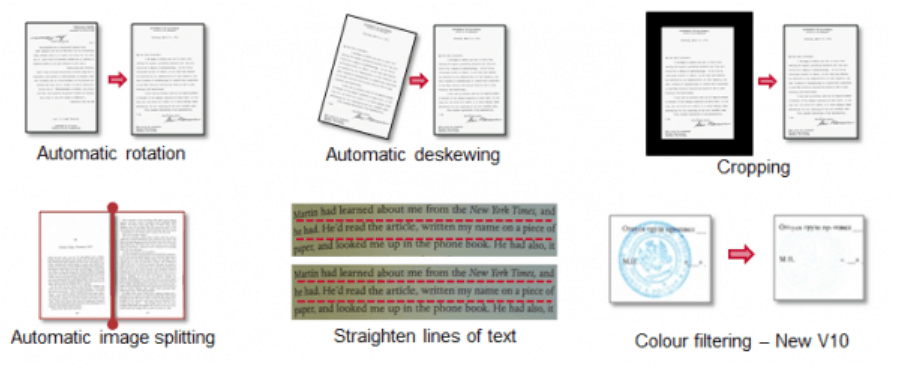

For some documents, you may also need preprocessing before data can be extracted. This could mean making the image clearer, cropping it at certain places, straightening skewed lines, etc.

These capabilities are built into the Docxster extraction step. You don’t even need to add any extra steps for it in the workflow.

Data transformation

In some cases, you may need minor changes or transformations to get the data into the expected format. For example, you follow the DDMMYYYY format for the purchase order date, but one customer uses the DDMMYY format. You can set rules in the ingestion workflow itself to reformat the data.
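As a rough idea of what such a rule does under the hood, here is a small sketch that normalizes both date formats to DDMMYYYY. The function name `normalize_po_date` is hypothetical, and the two-digit year handling relies on Python's default pivot (00 to 68 maps to 2000 to 2068), which may not match your own business rule.

```python
from datetime import datetime

def normalize_po_date(raw):
    """Convert a DDMMYY or DDMMYYYY string to DDMMYYYY."""
    digits = raw.strip()
    if len(digits) == 8:
        parsed = datetime.strptime(digits, "%d%m%Y")
    elif len(digits) == 6:
        # Two-digit years follow Python's pivot: 00-68 -> 20xx, 69-99 -> 19xx.
        parsed = datetime.strptime(digits, "%d%m%y")
    else:
        raise ValueError(f"Unrecognized date format: {raw!r}")
    return parsed.strftime("%d%m%Y")

standard = normalize_po_date("05032024")
short_year = normalize_po_date("050324")
```

Because the value is parsed as a real date rather than padded as a string, impossible dates such as 31 February are rejected instead of being passed along to the ERP.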

Data validation

Developers/ML engineers also set up a data validation step to double-check that the extracted data is as expected. This step once required extensive coding. But with no-code data ingestion platforms like Docxster, all you need to do is drag and drop a validation step into the workflow, and the checks run automatically.
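For a sense of what a validation step checks, here is a minimal hand-written sketch. The required fields and the positive-quantity rule are assumptions I picked for a purchase order; your own rules will differ.

```python
REQUIRED_FIELDS = ("po_number", "po_date", "quantity")  # hypothetical schema

def validate_po(po):
    """Return a list of problems found in one extracted purchase order."""
    errors = []
    for field in REQUIRED_FIELDS:
        if po.get(field) in (None, ""):
            errors.append(f"missing {field}")
    quantity = po.get("quantity")
    # Only check the quantity rule when a value was actually extracted.
    if quantity not in (None, "") and (
        not isinstance(quantity, int) or quantity <= 0
    ):
        errors.append("quantity must be a positive integer")
    return errors

good = validate_po({"po_number": "PO-1", "po_date": "05032024", "quantity": 10})
bad = validate_po({"po_number": "", "po_date": "05032024", "quantity": -2})
```

Returning a list of problems, rather than stopping at the first one, lets a reviewer fix everything wrong with a document in one pass.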

Data loading

Once the document processing platform extracts your data, it sends the data to a secure drive that stores your records. In legacy tools, you get an output that you can export into a file format of your choice.

But with AI-powered platforms like Docxster, the data automatically gets sent to Docxster Drive, a cloud data lake, ready to be accessed whenever needed. We also send it to your tool of choice, like Google Sheets, Tally, or your ERP, so everything's where you need it.

A single-pass data ingestion workflow covering source connection, preprocessing, extraction, validation, and export in Docxster
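If you are curious how these steps chain together, the sketch below runs a document through extract, validate, and load in order. Everything here is a stand-in: the `key: value` parser replaces real AI extraction, the `erp_rows` list replaces a real ERP, and none of the function names come from Docxster.

```python
def extract(text):
    """Stand-in for AI extraction: parse 'key: value' lines into a dict."""
    record = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            record[key.strip().lower()] = value.strip()
    return record

def validate(record):
    """Reject records that lack a PO number."""
    if not record.get("po number"):
        raise ValueError("missing PO number")
    return record

erp_rows = []  # stand-in for the target system

def load(record):
    """Append the record to the stand-in destination."""
    erp_rows.append(record)
    return record

def run_pipeline(document, steps):
    """Pass the document through each step, feeding each output to the next."""
    result = document
    for step in steps:
        result = step(result)
    return result

run_pipeline("PO Number: PO-1001\nQuantity: 25", [extract, validate, load])
```

Keeping each step as its own function means you can add a transformation or swap the destination without touching the rest of the flow.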

What are the common data ingestion challenges?

Building data ingestion workflows comes with its share of operational challenges. Almost all of them can be solved by bringing in the right tool:

Data quality

One major issue is that source documents often have quality problems and are not ready to be processed.

For instance, Lawrence Guyot, President, ETTE, says around 25% of the documents at their organization deviate from expected formats, which would break the entire workflow.

AI-powered data ingestion tools are designed to address repetitive failure points. For instance, Docxster, a document ingestion platform, is designed to handle and solve the common document quality issues.

Data security and compliance

Dealing with data means you need to stay compliant with data protection laws and industry standards. When you build data ingestion pipelines in-house, implementing these standards adds to your maintenance workload.

Ready-to-use data ingestion tools are already compliant with industry standards. For instance, Docxster is compliant with GDPR and ISO standards. You get built-in security features with encryption for sensitive data rather than implementing everything from scratch.

Data latency

Data latency is another common challenge in building data ingestion pipelines. Delayed data has a cascading effect on operations and analytics. Data ingestion tools allow different types of scheduling to handle such delays.

For example, in Docxster, you can run batch, real-time, or micro-batch data ingestion pipelines, depending on how often you expect the documents.

Data integration

Building a custom data ingestion pipeline for every source is a heavy lift. It is not only time-consuming, but it also creates data silos.

If your team manually processes documents, whether email attachments run through OCR or physical files, all the data gets scattered across different Excel sheets. Data ingestion tools connect to all sources, process documents, and integrate data in one centralized location.

Cost management

Building and managing pipelines is costly. You need to build a tech talent pool for development and maintenance. Skilled ML engineers charge around ₹1,014.70 per hour. Having even one engineer on board full-time means a recurring cost of about ₹1,62,352 a month, assuming a 160-hour work month.
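The recurring figure lines up with the hourly rate multiplied by 160 hours. The 160-hour month (8 hours across 20 working days) is my inference from the numbers, not something stated outright, so treat this as a back-of-the-envelope check:

```python
from decimal import Decimal

HOURLY_RATE_INR = Decimal("1014.70")  # hourly rate quoted in the article
HOURS_PER_MONTH = 160                 # assumption: 8 hours x 20 working days

monthly_cost = HOURLY_RATE_INR * HOURS_PER_MONTH
annual_cost = monthly_cost * 12

print(monthly_cost)  # 162352.00
print(annual_cost)   # 1948224.00
```

Swap in your own rate and hours to estimate what a single full-time engineer would cost your team.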

Another major cost is the infrastructure setup needed to run data ingestion pipelines. High-end servers cost upwards of ₹77,000 per month. On top of that, there are additional costs for electricity, maintenance, and software licenses.

Data ingestion tools result in both cost and time savings. For instance, Docxster has affordable plans starting at $45 per month.

Solve your document ingestion needs with Docxster

Data ingestion pipelines bring all your scattered documents into one centralized location. They also reduce manual work and the chance of errors.

Data ingestion tools now make building these pipelines easy. Creating document ingestion pipelines once took weeks or even months of work. With Docxster, developers can now finish it in a couple of hours.
